MPI学习--并行系统和并行模式

这里主要总结并行系统的分类和几种并行编程模式,都是些概念。

当然现在的目的还是为了学习MPI,不过事实上MPI并不能完全的算作是理想意义上的并行编程模型,它只是提供了一种粗粒度的并行机制,而在进程的粒度上看,MPI程序仍是并行的。

并行系统

Flynn分类法 – 按指令/数据模式的分类

从处理器指令级别的并行来考察的话,Flynn依据指令和数据流的不同给出计算机的分类,例如SIMD和MIMD。

关于什么是单指令多数据流和多指令多数据流,我在quora上面搜到了个不错的解释:

SIMD and MIMD are types of parallel architectures identified in Flynn’s taxonomy, which basically says that computers have single (S) or multiple (M) streams of instructions (I) and data (D), leading to four types of computers: SISD, SIMD, MISD, and MIMD. Let’s skip SISD and MISD, since the question doesn’t ask about them, and neither is spectacularly interesting.

Single Instruction Multiple Data (SIMD) means that all parallel units share the same instruction, but they carry it out on different data elements. The idea is that you can, say, add the arrays [1,2,3,4] and [5,6,7,8] element-wise to obtain the array [6,8,10,12] in one big whoop: for this, there have to be four arithmetic units at work, but they can all share the same instruction (here, “add”), and work by all performing the same actions in tight, lock-step synchronicity. This usually means putting multiple data-manipulation thingies inside the same processing core as one instruction decoder, for the sake of the tight timekeeping.

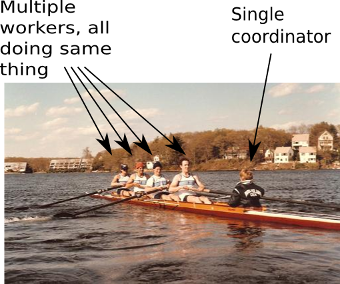

Here’s a picture of a SIMD kind of sports teamwork, for illustration:

Multiple Instruction Multiple Data (MIMD) means that parallel units have separate instructions, so each of them can do something different at any given time; one may be adding, another multiplying, yet another evaluating a branch condition, and so on. This is the sort of parallelism you get with threads, which basically let programs dispatch an entire function call to run on a different processor. This means involving multiple fully-featured, independent processing cores, whether they are on the same chip (multi-core), different ones (multi-processor), or a mixture of the two.

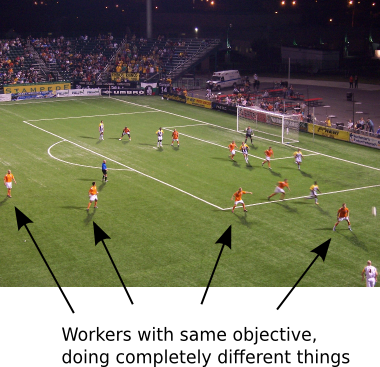

Here’s a picture of a MIMD kind of sports teamwork, for illustration:

接着上面,随着体系结构的发展,MIMD体系有进一步划分为:

- 多计算机多地址空间消息传递计算系统

- 分布式多计算机系统

- 集中式多计算机系统

- 多计算机单地址空间共享存储计算系统

- 分布存储的共享存储系统

- 集中存储的共享存储系统

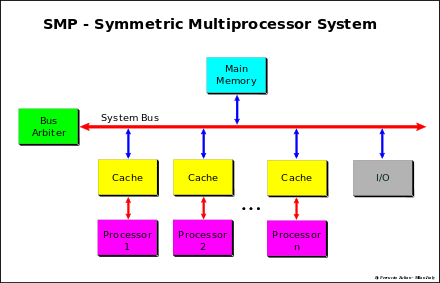

对称多处理器系统(SMP)

典型的,只一个系统(节点)内多个处理器共享内存的形式, 各处理器具等的战友总线和内存访问机会。广义上,多核处理器就是出于这一范畴。在一个节点内部可多线程或者多进程的执行并行作业。多线程的话就是通过隐式通信,多进程则应使用某种特殊机制。

集群计算机系统

从分类上看,可以将集群计算机归属于分布式存储的MPMD/MIMD机型。采用自制的SIMD计算机或者SMP计算机为节点,通过高速网络互联组织。

向量机

现代体系结构设计已经把向量操作指令集集成到了处理器硬件逻辑中,有些SIMD指令也支持向量操作。在向量机节点或以向量机为节点组成的集群系统中也能支持MPI规范。

并行编程模式

隐式并行

借助编译器和运行时环境的支持发掘程序并行性,对穿行程序进行并行化,关键是分心程序的数据相关和控制相关,如果存在相关性则需要采用转换技术(重构/优化)将其删除。话说上次的MIC培训其实就是在将这些优化策略。

数据并行

数据并行依靠所处理数据集合无关性,借助数据规划分来驱动程序之间的并行执行。该类型的并行主要强调局部计算和数据划分,执行流程之间的同步需要在编译阶段加以显式规划。

消息传递

消息传递模型可通过如下几个概念加以定义:

- 一组仅有本地内存空间的进程;

- 进程之间通过发送和接受消息进行通信;

- 进程之间需要使用协同操作完成数据传递,如发送操作必须要求有与之配对的接受操作。

消息传递模型的主要缺点:要求在编程过程中参与显示的数据划分和进程间同步,因此会需要在解决数据依赖和预防死锁等问题上花费很大精力。